São Paulo, 15 December 2020 (PAHO/WHO) - The series of six webinars “Strengthening health information networks”, aimed at the networks of the Virtual Health Library (VHL) and the Libraries of the Unified Health System (BiblioSUS), started in June this year. The first three webinars focused on bibliographic control and on the role of the Network as a disseminator and intermediary of scientific knowledge in health.

The following three webinars, in turn, dealt with scholarly communication topics within the scope of the VHL information sources. The last topic, Evaluation of science and impact indexes, was presented on October 14 by two professionals: Lilian Calò, Coordinator of Scientific and Institutional Communication at BIREME, and Jaider Ochoa-Gutiérrez, Professor at the Inter-American School of Library Science, Universidad de Antioquia, Medellín, Colombia.

Calò began by contextualizing the need to evaluate science in order to develop public policies, promote relevant research, measure the performance of postgraduate programs, and hire and promote researchers according to the merit of their research work. The impact of science cannot be measured in a short time; even so, the impact indicators of scientific publications, usually based on citations, are processed over 2 to 3 years. There are many scientific impact indices, and the best known of them, the Impact Factor, inspired a series of other very similar but competing indices, such as SCImago Journal Rank, CiteScore, and others.

The use of impact indicators is not simple, and their interpretation requires knowledge of how they are calculated and of the database used to count citations. One point, however, seems to have become a consensus since the first years of the 21st century: impact indexes based on citations were created to evaluate journals and should not be used to evaluate researchers in hiring processes or career progression, as advocated by the San Francisco Declaration on Research Assessment[1] and the Leiden Manifesto[2]. A measure of excellence of scientific journals is their indexing in thematic databases (for example, LILACS, MEDLINE, PubMed) or multidisciplinary databases (Web of Science, SciELO, Scopus, among others), because of their rather strict criteria for admission and permanence.

Complementing the traditional indexes based on citations, there are also usage and download measurements, which serve to assess how much articles are accessed and read even before they are cited. Calò also mentioned the metrics based on social networks, which are gathered in the Altmetrics index.

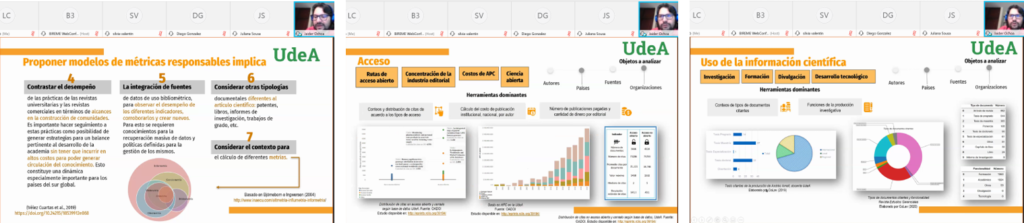

Next, Jaider Ochoa-Gutiérrez presented the scenario for the evolution of Latin American and Caribbean science through responsible metrics. According to Ochoa, despite the wide range of available metrics, usually based on citations, the assessment of science, dimensionally speaking, remains problematic. Citations, Ochoa observes, are traditionally associated with journal articles and overlook other types of documents, such as books, research reports, patents, undergraduate work, etc. In addition, disciplinary differences in modes of production, as well as the assessment of knowledge produced in different languages, must be considered.

In proposing responsible metrics, Ochoa clarified that this implies participating in a cooperative assessment exercise in which open science plays a key role. Moreover, criteria must be sought to measure the different forms of performance and impact: scientific, social, economic and political. It is also necessary to integrate data sources for bibliometric use and to measure the impact of different types of documents. Ochoa showed that forms of access to documents, open access routes, APC costs and many other variables that are not normally included in bibliometric analyses should also be taken into account. The importance of creating and using responsible metrics lies in the fact that research is a state-financed asset and, therefore, it is necessary to know or estimate its impact.

[1] The Declaration on Research Assessment (DORA) recognizes the need to improve the ways of evaluating the results of academic research. The declaration was developed in 2012 during the Annual Meeting of the American Society for Cell Biology in San Francisco and has become a global initiative covering all academic disciplines and all stakeholders, including research institutions, funding agencies, publishers, scientific societies, and researchers. https://sfdora.org/

[2] Leiden Manifesto for research metrics http://www.leidenmanifesto.org/